The Blockchain Debate Podcast

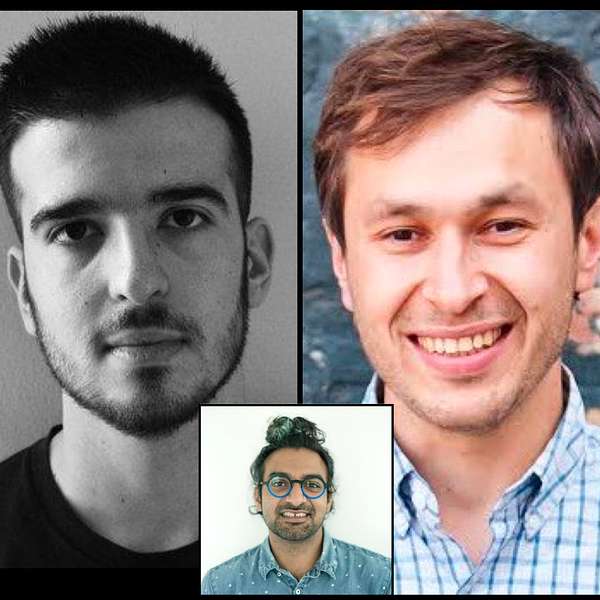

Motion: Scalability is impossible without sharding and layer-2 solutions (Georgios Konstantopoulos vs. Anatoly Yakovenko, cohost: Tarun Chitra)

Guests:

Georgios Konstantopoulos (@gakonst)

Anatoly Yakovenko (@aeyakovenko)

Hosts:

Richard Yan (@gentso09)

Tarun Chitra (@tarunchitra, special co-host)

Today’s motion is “Scalability is impossible without sharding and layer-2 solutions.”

Today’s discussion is highly technical in nature and I’m glad to have Tarun as my co-pilot. Between the two debaters, one is building a public chain that insists on achieving scaling without sharding, and the other is an independent consultant with extensive experience with various chains, including many layer-2 solutions.

My co-host Tarun’s summary at the end was quite apt - it’s easy to pull a carriage with one horse, but can you pull a carriage with 1024 chickens? The debate seems to partially come down to how powerful the machines can get to sustain the ever-growing network activities without resorting to quote unquote, fancy techniques like sharding and layer-2 solutions.

If you’re into crypto and like to hear two sides of the story, be sure to also check out our previous episodes. We’ve featured some of the best known thinkers in the crypto space.

If you would like to debate or want to nominate someone, please DM me at @blockdebate on Twitter.

Please note that nothing in our podcast should be construed as financial advice.

Source of select items discussed in the debate:

- Solana: https://solana.com/

- Georgios: https://www.gakonst.com/

- The Gauntlet: https://gauntlet.network/

Welcome to another episode of The Blockchain Debate Podcast, where consensus is optional, but proof of thought is required. I'm your host, Richard Yan. Today's motion is: Scalability is impossible without sharding or off-chain solutions. Today's discussion is highly technical in nature, and I'm glad to have Tarun as my copilot. Between the two debaters, one is building a public chain that insists on achieving scaling without sharding, and the other is an independent consultant with extensive experience with various chains, including many layer-2 solutions. Any summary by an insufficiently technical person would do no justice to the debate, so I won't attempt one here. If you're into crypto and like to hear two sides of the story, be sure to also check out our previous episodes. We've featured some of the best known thinkers in the crypto space. If you would like to debate or want to nominate someone, please DM me at @blockdebate on Twitter. Please note that nothing in our podcast should be construed as financial advice. I hope you enjoy listening to this debate. Let's dive right in!

Welcome to the debate. Consensus optional, proof of thought required. I'm your host, Richard Yan. Today's motion: Scalability is impossible without sharding or off-chain solutions. To my metaphorical left is Georgios Konstantopoulos, arguing for the motion. He agrees that scalability is impossible without sharding or off-chain solutions. To my metaphorical right is Anatoly Yakovenko, arguing against the motion. He disagrees that scalability is impossible without sharding or off-chain solutions. And to my metaphorical middle, since today's show is a bit different as you'll see, is my special co-host Tarun Chitra, who will be moderating the debate with me. Given his technical expertise, he'll be asking most of the questions. Georgios, Anatoly, Tarun: I'm super excited to have you join the show. Welcome!

Tarun:Glad to be back.

Anatoly:Hi, wonderful to be here.

Georgios:Thank you for having us.

Richard:Perfect. Here's a bio for the two debaters. Georgios is an independent consultant operating in the intersection of cryptocurrency security, scalability and interoperability. His current focus is on layer-2 scaling solutions, zero-knowledge proofs, and the emerging DeFi ecosystem. Anatoly is founder and CEO of Solana, a layer-1 public blockchain built for scalability without sacrificing decentralization or security, and in particular, without sharding. He was previously a software engineer at Dropbox, Mesosphere and Qualcomm. As for Tarun, he is founder and CEO of the Gauntlet, a simulation platform for crypto networks to help developers understand how decisions about security, governance and consensus mechanisms are likely to affect network activity and asset value. He previously worked in high-frequency trading for Vatic Labs, and was a scientific programmer at D. E. Shaw Research. We normally have three rounds: opening statements, host questions, and audience questions. Tarun will be running most of the show today. The opening statements section will now consist of scaling-related questions, including getting clarification from both debaters about what they mean by scaling. The host questions portion will involve questions on sharding and layer-2 solutions. And we'll wrap up with audience questions plus concluding remarks. I will pop in from time to time to make sure key concepts are explained and logical leaps are elaborated upon. Currently our Twitter poll shows that 63% agree that it is impossible to scale without sharding or off-chain solutions. After the release of this recording, we'll also have a post-debate poll. Between the two polls, the debater with a bigger change in percentage of votes in his or her favor wins the debate. Okay. Tarun, please go ahead and get started with round one questions to help clarify the concept of scaling.

Tarun:So I think scaling is one of the most misused, or at least most variously used, concepts in cryptocurrencies and distributed systems. And I wanted each of you to take a couple of minutes to define what scaling means to you. You know, in a lot of ways, there are many aspects to scaling: the naive ones are bandwidth and latency. But in the cryptocurrency space, there's also adverse selection and the trade-offs you make for security in order to improve bandwidth and latency. And the real question is, what does it mean for a blockchain to scale? And I should point out that it's unlikely that there exists a unique and strictly best definition that encompasses all properties of scalability and can be proven to be realizable. But, you know, dream big. You don't need to think in terms of purely practical definitions right now.

Georgios:Well, in my mind, blockchain scaling and scalability are two separate terms. Scaling is the ability of a system to increase the amount of data being processed. Scalability is how the cost of running the system changes as the scale increases, and Jameson Lopp once said that systems with poor scalability have their costs grow at a rate faster than the data that can be processed. So in my mind, blockchain scaling and scalability describe a constrained optimization problem where the objectives are to maximize throughput, the transactions per second, while minimizing latency, the time until a transaction is confirmed, under two constraints. One constraint is maintaining the ability to sync and keep up with the chain as it grows, which you would call the public verifiability of the chain. The other is maintaining a reasonably big set of block producers, which is the censorship resistance property.

Anatoly:I think my definition isn't too far off from what Georgios said. The way I always frame it, when we talk about scaling from an engineering perspective, is that to me it's always like the Shannon-Hartley theorem. You're trying to increase the capacity of the channel, and you have bandwidth, noise and power. In software, and most hardware, you don't have any noise, so the only thing you can really increase is bandwidth, and that means adding more cores, adding more pins, adding bigger pipes effectively, and that's how we increase capacity. Sharding is also one of those mechanisms where you're effectively increasing bandwidth, because you've now duplicated two networks that are processing stuff in parallel. To me, the question is not whether we increase bandwidth or not, it's where you do it. And sharding in my mind is the wrong spot to do it, because it's increasing bandwidth post-consensus. Why I think that's the wrong spot comes back to the key point that Georgios brought up, that the thing we're trying to build first and foremost is censorship resistance. And to me that means maximizing the number of participants in the network, or rather, maximizing the minimum set of participants that could censor the network. So how would we do that? I think the best path to do that is hardware, because hardware is one of those ridiculously cheap resources that just keeps getting bigger and faster. Because, you know, we're standing on the shoulders of giants. By that, I mean TSMC, NVIDIA, Intel, Qualcomm, Apple, everyone else in the space that just keeps pouring, you know, hundreds of billions of dollars into the hardware side of things.
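
For reference, the Shannon-Hartley theorem mentioned above gives channel capacity as C = B * log2(1 + S/N). The short sketch below is not from the speakers; the bandwidth and signal-to-noise figures are arbitrary illustrative values. It only shows why bandwidth is the attractive lever: capacity grows linearly in B but only logarithmically in S/N.

```python
import math


def channel_capacity(bandwidth_hz: float, snr: float) -> float:
    """Shannon-Hartley capacity in bits per second: C = B * log2(1 + S/N)."""
    return bandwidth_hz * math.log2(1.0 + snr)


# Illustrative numbers only (assumptions, not measurements).
base = channel_capacity(1e6, 1000.0)
doubled_bandwidth = channel_capacity(2e6, 1000.0)  # twice the "pipe"
doubled_snr = channel_capacity(1e6, 2000.0)        # twice the signal-to-noise ratio

print(f"doubling bandwidth multiplies capacity by {doubled_bandwidth / base:.2f}")
print(f"doubling S/N multiplies capacity by {doubled_snr / base:.2f}")
```

In the analogy used in the debate, "bandwidth" stands for cores, pins and pipes, so adding them scales capacity proportionally under this model.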

Tarun:Yeah, I think there's definitely a lot to be gained from the fact that there's been the world's largest R&D effort in history, since the sixties and seventies, towards a lot of our current hardware solutions. However, I think there's no one-size-fits-all solution. You know, we can compare software versions of scalability, such as kernel modules in Linux, like SMP, or I guess the migration to Linux 2.6. And we can also consider completely new architectures, say like the Xeon Phi. And we get very different scaling properties. In some cases there's a big benefit to not having Turing completeness, or the ability to do any calculation that you want, within these systems. So, you know, what types of tradeoffs do you view as the most important when defining scalability? There's this resource versus scalability tradeoff, and there's also this computational complexity versus scalability tradeoff: do I really need a Turing-complete language or not? And then there's also a notion of what you consider necessary and/or sufficient for a scalable system. So the question is, A, do you think that blockchains handicap themselves by trying to find the most generic scaling solutions? And B, how should they handle the tradeoff with resource minimums?

Georgios:With respect to Turing completeness, first of all, I think the main issue, which Ethereum learned the hard way, is that a Turing-complete virtual machine combined with the stateful account model can be problematic. The typical issue with having both state and Turing completeness is that each user's transaction must take a mutex on the whole system, because a transaction may touch a large amount of state, and you cannot know which parts of it it will touch until you execute it. If, however, you remove or restrict Turing completeness and go for something more stateless, which to the best of my understanding is what's going to happen in ETH 2 with stateless execution, you get something similar to the UTXO model, which is very parallelism-friendly: you're able to distribute the workload across transactions that do not touch overlapping memory regions, while still having a relatively expressive language to define the spending policies for your coins. So I think that the initial Ethereum approach was fine, but now we're backpedaling to a more performant, but more limited, system.

Anatoly:You don't need to give up Turing completeness to have stateless execution, right? The only thing that you need is transactions that specify which memory regions they are going to read and write, which is how Solana was built. And this is how hardware has been built for like the last 30 years.

Georgios:But what if I issue a transaction which wants to touch a very large part of the memory? Suddenly you get a bottleneck. For example, if I have a cross-contract call which takes a flash loan, then executes a transaction on Uniswap, then sells the rest of the money somewhere else, and then repays the loan, you will need to allocate huge chunks of memory.

Anatoly:But that's part of the cost model, right? That particular transaction that needs to touch those segments of memory pays for the memory that it's touching. And that's fine, right? Because the rest of the system, all the peer-to-peer payments between users, don't care: they're executing on different cores, touching different parts of the memory. Still, at the end of the day, there's some destructive memory update that creates a sequential ordering of all the stuff that's executing, right? So the write lock has to exist somewhere. And you don't need sharding, because you can create those write locks on demand, per transaction instance. In fact, what you described is why we don't need sharding. When every transaction knows ahead of time exactly where it's going to read and write, the only thing we need to do to increase capacity is to double the number of cores. And I'm not sure about the execution model, what you guys mean by stateless in ETH 2. What we mean by stateless is that the code is separate from the state. So the code itself is position-independent code, right? It's PIC, which means that it has no writeable globals. You can think of this code as a kernel on a GPU. You can load as many transactions as you want, along with the non-overlapping states that they're going to read and write, and execute that single chunk of code across a bunch of these transactions in parallel. Our particular bytecode is designed for that kind of execution because it does not have a stack pointer.

Georgios:What do you do, though, if two transactions... So let's say we have an order in an order book, and two users want to take that order. Won't they allocate memory for the same piece, and you get race conditions?

Anatoly:The block producer decides which one goes first, just like everywhere else. The first one runs and does its update. The second one runs, and that one may error out, but it doesn't matter. Right? The end result is that that particular write lock is localized to that particular bid and ask, and all the other bids and asks that don't care about that can run and execute in parallel. So you have contention and serialization around isolated parts of the system.
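
The idea of localized write locks can be sketched in a few lines. This is an illustrative toy, not Solana's actual scheduler: the `Tx` type, the account names and the greedy batching rule are all assumptions made for the example. Each transaction declares its read and write sets up front; transactions in the same batch touch disjoint state and could run on separate cores, while conflicting ones are pushed to a later batch in block-producer order.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    """A transaction that declares, ahead of time, what it reads and writes."""
    name: str
    reads: frozenset = frozenset()
    writes: frozenset = frozenset()


def conflicts(a: Tx, b: Tx) -> bool:
    """Two transactions conflict if either writes something the other touches."""
    return bool(a.writes & (b.reads | b.writes)) or bool(b.writes & (a.reads | a.writes))


def schedule(txs):
    """Greedy batching that preserves the producer's order between conflicting txs.

    Each tx lands in the batch right after the last batch holding a conflicting tx.
    """
    batches = []
    for tx in txs:
        slot = 0
        for i, batch in enumerate(batches):
            if any(conflicts(tx, other) for other in batch):
                slot = i + 1
        if slot == len(batches):
            batches.append([])
        batches[slot].append(tx)
    return batches


# Hypothetical block: two unrelated payments and two takers racing for one order.
block = [
    Tx("pay1", writes=frozenset({"alice", "bob"})),
    Tx("pay2", writes=frozenset({"carol", "dave"})),
    Tx("take1", writes=frozenset({"order42", "erin"})),
    Tx("take2", writes=frozenset({"order42", "frank"})),
]
batches = schedule(block)
for i, batch in enumerate(batches):
    print(i, [tx.name for tx in batch])
```

Only the two takers of `order42` are serialized; the payments never wait on them, which is the "contention around isolated parts of the system" described above.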

Georgios:But how can you know that the transaction will not touch? So, you know, I issue a transaction. How can you know that the transaction will not touch pieces of memory, that the other transactions need to execute? What I'm saying is when you're, I said earlier that...

Anatoly:Because it tells me ahead of time...

Georgios:Right. It tells you ahead of time, but you need to, quote unquote, trust that it's going to indeed only touch these pieces of memory. But what if I say I need this memory, and then I end up touching some other piece of memory?

Anatoly:That load will fail, right? The way we've implemented it, at least, is very much like a page load model on a CPU, right? You load a piece of memory that your process doesn't have access to, you get a fault, and this thing bails out. So the transaction effectively specifies ahead of time which regions of memory it needs. And if it tries to load something that wasn't preconfigured ahead of time, that load will fail, or that store will fail, if it tries to write to a read-only segment.
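
A minimal sketch of this fault-on-undeclared-access behaviour follows. It is a toy model under stated assumptions, not the real runtime: `GuardedMemory`, `AccessFault` and `execute` are hypothetical names, writable regions are assumed to be readable too, and a faulting transaction is assumed to leave state untouched.

```python
class AccessFault(Exception):
    """Raised when a program touches a region it did not declare."""


class GuardedMemory:
    """View of the state that only allows the declared loads and stores."""

    def __init__(self, state, readable, writable):
        self._state = state
        self._writable = set(writable)
        # Assumption for this sketch: anything writable is also readable.
        self._readable = set(readable) | self._writable

    def load(self, key):
        if key not in self._readable:
            raise AccessFault(f"load of undeclared region {key!r}")
        return self._state[key]

    def store(self, key, value):
        if key not in self._writable:
            raise AccessFault(f"store to non-writable region {key!r}")
        self._state[key] = value


def execute(state, readable, writable, program):
    """Run program on a scratch copy; commit only if it never faults."""
    scratch = dict(state)
    try:
        program(GuardedMemory(scratch, readable, writable))
    except AccessFault:
        return False  # the transaction bails out, state is unchanged
    state.update(scratch)
    return True


def honest(mem):
    mem.store("alice", mem.load("alice") - 3)
    mem.store("bob", mem.load("bob") + 3)


def sneaky(mem):
    mem.store("alice", mem.load("alice") - 1)
    mem.store("carol", mem.load("carol") + 1)  # "carol" was never declared


state = {"alice": 10, "bob": 0, "carol": 5}
ok_honest = execute(state, set(), {"alice", "bob"}, honest)
ok_sneaky = execute(state, set(), {"alice", "bob"}, sneaky)
print(ok_honest, ok_sneaky, state)
```

The scheduler therefore never has to trust the declaration: a transaction that lies about its footprint simply fails when it steps outside it.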

Tarun:This brings us to one point that is relatively important in this debate, which is that you have to rebuild a lot of basic primitives, like sbrk(), malloc(), free(), etc., in the way you guys are talking about it, except you have to assume a very different security and threat model. In particular, the invalid state from a page fault could be caused by a censorship attempt, or by a malformed transaction, or by some other consensus policy. And in some ways, these memory constraints have to interact with other components. This brings us to a point that I think both of you have pretty strong opinions on. I've noticed that, Anatoly, you often bring up weak subjectivity on Twitter. Firstly, could you define weak subjectivity for the audience? And secondly, can you explain what its relationship is with regard to scaling? I think a very interesting thing about weak subjectivity, at least based on what you guys were just talking about, is that you also have to make sure that these malloc()s and free()s interact fairly with these subjectivity boundaries and checkpointing and stuff like that. So, you know, maybe Anatoly, you can talk about that, and then Georgios, you can respond.

Anatoly:I think that is almost the crux of this whole argument. And it's a really interesting thing. It's almost religious at this point, right? To me, weak subjectivity means that I can't infer the security of the state from the data alone. What I mean by this is that, when I look at Bitcoin, I can look at the ledger, and because of proof-of-work, and because of the entire audit trail, I can effectively compute the security of any particular unspent Bitcoin in that ledger. Without actually talking to anyone else, I can estimate it based on my understanding of the cost of electricity, right? And that, to me, is a strong assumption that I can make about security. But we haven't figured out how to make those systems fast while preserving that kind of strong, measurable security. So every tradeoff that we make is a tradeoff towards this weak subjectivity side, where instead of looking only at the data, I now look at the network itself. And from that, based on the data that I have plus my observations of the network, I can see that, let's say, there's a thousand nodes that make up the minimum 33% needed to censor. And then I can say, okay, I verified the data, I verified my account, and I verified that the network has this many participants in this MPC for consensus. And at this point I trust it, right? Kind of trust on first use. And as long as I can maintain my connection and receive updates from this thing for long enough that I don't have to redo that, I can continue trusting it. And that part is not hard, right? This is based on some initial examination that I make. I establish trust, and then it continues running, and that has totally different security properties. I believe this is what Vitalik means by weak subjectivity, and I've been using the term to mean that: when you first observe the network, you have no way to really establish any hard security bounds on it. You kind of trust it. And then based on that trust, you continue using it, relying on that initial assumption.

Georgios:Yeah. So in my mind at least, the issue with weak subjectivity is exactly what you said: if you go offline and the validator set changes, then there's no way for you to determine objectively what the latest validator set is, and so you need to ask a friend to give you the latest one. But in the Solana context, at least my expectation is that when you talk about weak subjectivity, you involve checkpointing to work around the long, or impossible, syncing times. If you're producing blocks at, you know, 400 milliseconds per block, and each block has a lot of transactions, the state will clearly get very big. So is it fair to say that, when you have referred to weak subjectivity in the past, you meant checkpointing and getting the state without having to sync from the beginning?

Anatoly:Right, so in a network where you have some weak subjectivity, where you have to do this, what you're trusting is the censorship resistance of this initial set that you're talking to. And if you establish that this thing is censorship resistant enough, you can build all these other tools to verify and audit and corroborate that verification, and they are going to be just as good. So to me, the idea that we are going to step a little bit towards weak subjectivity by using proof-of-stake, but try to keep all this other stuff, is like a half measure. You're not making strong enough tradeoffs for it to be a huge differentiator, because you're giving up the most important part of Bitcoin, which is strong subjectivity. And if you're gonna give that up, then the audit trail is meaningless. What matters is the censorship-resistant set of nodes.

Georgios:Yeah. So, a nice approach to this is from Matt Bell's company, Nomic Labs, where they're building a sidechain for Bitcoin built on Tendermint, which regularly checkpoints its state to Bitcoin. So even if you've gone offline, you can always follow the checkpointing trail from Bitcoin. And assuming that Bitcoin's proof-of-work still works well, you will know what the latest state is. So would you consider applying such a technique on Solana, where for example, once a day, or whenever you change the validator set, you checkpoint your validator set and your state to Bitcoin?

Anatoly:What good would it do? Let's say the validator set changes and that the Bitcoin checkpoint is wrong.

Georgios:The checkpoint can't be wrong, because it will be checkpointed with two thirds of the previous stake.

Anatoly:But what I'm saying is, what if they diverge, right? Who is right? The set of nodes that are running on the network, that all the exchanges are connected to and are trading SOL for money, or whatever that hash is in the Bitcoin network? What is the source of truth here?

Georgios:Okay. So the source of truth, from my point of view, of course depends on the stakeholders of the system. So yeah, I see where you're coming from on the exchanges, definitely. But I really don't think that changes anything. As we saw, you don't want large players to determine the fate of an ecosystem. This was almost done with Bitcoin during the block size wars, and we clearly saw why it was very important for users to be able to run the software. So this is exactly why I specifically am very, as you said earlier, religious about the ability to sync a chain and keep up to date. As a simple user of the chain, not as a block producer, you must be able to self-verify what's going on. If you cannot sync the chain, then you get into situations where you have given up control to other parties of the ecosystem, and you completely lose decentralization. And if you've lost that, why are we working in this industry at all?

Anatoly:But if the set of the other parties is large enough to the point where, it's nearly guaranteed censorship resistance, then you haven't really given up anything, right. What I mean is that assuming you have a censorship resistant oracle, then you're fine, right?

Georgios:What does that mean?

Anatoly:It means that you give some weight to the likelihood of any node being corrupt. And then, for the 33% that can control the censorship of the network, what is the probability of them all being corrupt together? Right? So right now you look at Tezos, Cosmos, it's about six or seven nodes that make up 33%. Let's say each one has a 50% chance of being corrupted. Then the likelihood of that network being corrupted is what, 1/2^6? Those are not very small odds: it's 1 out of 64. Those kinds of networks are, in my mind, just so early that we haven't really achieved censorship resistance. And the goal of this whole thing is to build a network that is so scalable, where that set is so large, that even if you considered the likelihood of any one of these nodes being corrupted to be like 99.9%, you would still believe that the network on its own is not corrupted, right. That it's not censoring. And if you can establish that, then it doesn't matter what the audit trail is.
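
The arithmetic behind this argument is easy to reproduce. The sketch below assumes, as the spoken example implicitly does, that nodes are corrupted independently with the same probability p, so that a censoring set of k nodes is fully corrupt with probability p^k. The function names are illustrative.

```python
import math


def p_all_corrupt(k: int, p: float) -> float:
    """Probability that all k nodes of the smallest censoring set are corrupt,
    assuming each is corrupt independently with probability p."""
    return p ** k


def nodes_needed(p: float, target: float) -> int:
    """Smallest k such that p**k <= target."""
    return math.ceil(math.log(target) / math.log(p))


# The example from the discussion: six nodes, each 50% likely to be corrupt.
small_network = p_all_corrupt(6, 0.5)
print(f"6 nodes at p=0.5: {small_network} (1 in {1 / small_network:.0f})")

# How large must the censoring set be to reach the same odds
# if every node is 99.9% likely to be corrupt?
k = nodes_needed(0.999, small_network)
print(f"nodes needed at p=0.999 for the same odds: {k}")
```

Under these assumptions the pessimistic 99.9% case needs a minimum censoring set in the thousands just to match the 1-in-64 figure, which is why the argument leans so heavily on making that set very large. Real-world corruption is unlikely to be independent, so this is an optimistic bound.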

Georgios:So let's take a step back on this. So our subject is comparing the sharding vs the no-sharding solutions. Tezos, Cosmos, Solana are all in the no-sharding approach. Well, Cosmos not so much, if you say that you have multiple chains that operate via the hub. The idea, however, is that you use sharding and layer-2 to limit, or rather, to distribute your load, your state, your chain's growth. This is the objective. Because as I said originally, you want, you have a big constraint. You want to maintain public verifiability for the chain. So the reason why I want to push harder on why sharding and layer-2 and off-chain solutions are required, is that you must contain the chain's growth. If your chain grows, I don't know, like 50 megabytes, or like a large amount every day, there is no way for your users to either do the initial block download, or for them to store the chain, or to stay synched after they have...

Anatoly:How does sharding help you there?

Georgios:Because in sharding, they're all distributed all over the shards. You scale out, instead of scaling up.

Anatoly:But they all share the same security model, right? So if I'm a new user and I connect to this network, I don't care if it's two networks at 50 megabits per second, or one network at a hundred megabits per second. I still have to verify all the shards and do a full audit check.

Georgios:No, that's the whole point. It depends on the design that you're looking at, but generally speaking, if you have the typical design where you have a beacon chain, which [inaudible] enabled for all shards, you just check the beacon chain state, and then you are convinced that no shard is corrupt. And then if you want to sync your state on one shard, because, you know, the data that you're using lives on one shard, then you don't need to keep up with, or maintain the state for, all the other shards.

Anatoly:Right, so that's almost trivial to do without sharding. The way Solana is designed right now, all you need to do is verify the system program transactions, because you effectively make the rest, the other parts of the execution, opaque. And all you're seeing is that the balances of the underlying system transfers are valid. If you're excluding verification of all the sub-shards, and all the arbitrary execution those things are doing, and you're telling me that that is good enough, then you don't need sharding for that kind of audit trail.

Georgios:Just to make sure I understand what you're saying: you're saying that, in your non-sharded system, you will exclude transactions that involve, what?

Anatoly:So, you can do the same things as in sharding, right? You can shard execution without sharding the network and consensus itself, right.

Georgios:I agree on that. So the question I have is, but you're still storing everything. That's what I'm saying. You're still forced to store everything in order to produce a block or, you know, in order to keep in sync, you still need to download everything. I'm not talking about execution at all. I'm just talking about storing, literally like storing the data, basically.

Anatoly:I don't need to download transactions that don't touch the virtual machine that I care about. I have...

Georgios:Yes you do. Because the state has changed in some way. And you need to be able to verify that the state has changed in the expected way, so that you can [inaudible] on the block afterwards.

Anatoly:I only need to verify transactions in and out of the VM, right? So I have just system transfers, which are effectively transactions between virtual machines, right. And then everything that happens inside the VMs is opaque. The only thing I need to do is look at the Merkle root of whatever that thing is doing, to complete the hash chain verification. And I don't really care about what it's doing inside of that. Right? So my point is that, whatever you're doing with sharding on the execution side, the only thing you're introducing is data availability problems, because I can just as easily run a single set of blocks that organize the data such that you can prune the execution that you don't care about when you're doing the verification, and do partial verification that gives you an audit trail for the ETH, or whatever the gas version of the transfers is, and you can prune out every other virtual machine. But the thing is that, by introducing these data availability problems, you're making that audit trail way harder to verify. And in my view, the reason why we don't need to do this sharding thing is because hardware is going to double every two years. So if you imagine a chain that runs at a hundred percent capacity of a single machine, the maximum amount of time to verify it, at any time in the future, as long as Moore's Law keeps going, is going to be less than four years, probably on the order of two, two point something.
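
The "two point something" figure can be checked with a short calculation. The model below is an assumption made for illustration, not something spelled out in the debate: hardware speed doubles smoothly every D = 2 years, the chain always saturates one current machine, and the verifier replays the whole history on a machine bought today that does not get faster while it syncs. A year of history produced t years ago then takes 2^(-t/D) years to replay, and summing over a chain of age T gives (D / ln 2) * (1 - 2^(-T/D)), which is bounded by D / ln 2 regardless of T.

```python
import math


def replay_years(chain_age_years: float, doubling_years: float = 2.0) -> float:
    """Time for a present-day machine to replay a chain that always ran at
    100% of a contemporary machine, under smooth exponential speed-up.

    Closed form of the integral of 2**(-t / D) for t from 0 to chain age.
    """
    d = doubling_years
    return (d / math.log(2)) * (1.0 - 2.0 ** (-chain_age_years / d))


upper_bound = 2.0 / math.log(2)  # limit as the chain age goes to infinity

for age in (2, 10, 40):
    print(f"chain age {age:>2} years -> replay takes {replay_years(age):.2f} years")
print(f"upper bound for any age: {upper_bound:.3f} years")
```

The bound holds only while the doubling assumption does, which is exactly the point contested in the exchange that follows.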

Georgios:Let me just push back a bit on the core argument around the increase of hardware efficiency. First of all, let's remember that Moore's Law is not a law. The way that Gordon Moore framed it was about the density of transistors at which the cost per transistor is the lowest on a circuit. So the practical implication of Moore's Law is that the number of transistors goes up, the density of the transistors on a chip goes up, while the price remains the same. But how do you see this playing out? Now we're seeing 5 nanometer transistors going into production, and, you know, maybe 3 nanometers is going to happen, but currently GlobalFoundries is out of the competition. Intel is late to the party because they're still doing 7 nanometer production. And the remaining players are Samsung and TSMC. And the thing is that, as you keep making chips smaller, the production costs increase. And if you have just two manufacturers in the chip industry, the cost is naturally going to increase. So firstly, I definitely believe that you might keep getting a huge increase in compute over time, but that increase in compute is not going to scale well with the cost. And secondly, let's again remember that you cannot just keep making things smaller, because you reach physical limits: you get the quantum tunneling effect, where your transistors will turn on and off at unexpected times because the transistors are too small and too close to each other. So you cannot really get smaller than a certain size.

Tarun:Let me, let me interject a little bit...

Georgios:Tarun, you're definitely qualified to give more context on this, I guess.

Tarun:Yeah. It seems very unlikely that Moore's Law can continue. Georgios has laid out pretty much all of the reasons that I think...

Anatoly:So my counter argument to why it's going to continue for the next 20 years is that we're going to move to 450 millimeter wafers sometime soon. And not only that, we're going to start stacking these things in three-dimensional structures, right. We're already doing some of that. But, like you guys said, transistor counts, the amount of silicon we can put into a single system, is going to continue to grow. And that key part is what matters.

Tarun:But wait, wait, wait, actually one quick point. I think Georgios' point is actually that the density is leveling off. Because we know that the tunneling probability at, you know, like 500 nanometers, or sorry, at 0.5 nanometers, is higher, right? Yeah.

Anatoly:But I don't care about the density. What I care about is how many SIMD lanes does my GPU card come with. And that's going to continue to double.

Georgios:My point is, if the density doesn't keep increasing, then your costs start to increase more. So yes, maybe you will get a better box. But what I'm saying is that the cost to acquire the better box will scale in a worse way than what you're estimating...

Anatoly:In dollar terms?

Georgios:... Yes, in dollar terms, both for consumers, but also for the companies producing them. So I have a chart in front of me showing how the costs for production of chips have evolved, and 10 nanometer chips cost about 175 million to develop, you know, to do physical verification, to get the software validated. 7 nanometer chips cost double that, and 5 nanometer chips cost more than double that. So I think we're going to see some explosion in how this cost evolves.

Anatoly:Every two years, the masks drop by half at least. So while the first generation...

Georgios:Yes, but we are approaching the limit. The physical limits.

Anatoly:And two years later, right, whatever, the 3 nanometer mask costs today, and maybe it's a hundred million dollars, it's going to cost 50.

Tarun:So one thing I would add, though, about mask production is that, you know, all of the etching technologies for doing things like 3D layered memory, as well as generating these 3 nanometer chips, rely on extreme UV lasers being extremely controllable and automated. And we haven't really been able to make these UV lasers in a manner that has been sustainable. So Intel spent like 10 years on R&D that they basically threw away, on UV, which doesn't get counted in some of these R&D numbers that Georgios is citing.

Anatoly:I'm going to make a statement that basically, if we, as a civilization, stop doubling SIMD lanes, then we're all dead. This is the great...

Tarun:Wait a minute. So this is the Come to Jesus moment for humanity, is that SIMD doubling is over. I don't think that's true. I think you could just have more chips horizontally, which is an alternative. It's not necessarily an ideal alternative.

Anatoly:Okay, whatever, but more chips horizontally is the same thing as doubling [inaudible].

Tarun:It's not though.

Anatoly:This is trivially parallelizable. It's not.

Georgios:Horizontal scaling is sharding. Let's remember that also.

Tarun:Right, this is a great time- and we can now actually just go straight into sharding. Because we took this route of what does scalable mean. What are the components of scalability? And now we've come to the fundamental point of, okay, we can optimize a single thread as much as possible, and get to these kinds of these physical limits.

Anatoly:That's not what I'm saying though, that I don't care about optimizing a single thread, right? We're talking about transactions that have non-overlapping reads and writes. So give me more chips. I can stick them all in one data center rack, and they can do a bajillion things per second.
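The idea of "transactions that have non-overlapping reads and writes" can be illustrated with a minimal sketch. It assumes each transaction declares its read and write sets up front; the `Tx` type, the `conflicts` rule, and the greedy `schedule` function are hypothetical names for illustration, not Solana's actual runtime.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    """Hypothetical transaction that declares the accounts it touches."""
    name: str
    reads: frozenset = frozenset()
    writes: frozenset = frozenset()


def conflicts(a: Tx, b: Tx) -> bool:
    # Two transactions conflict if one writes an account the other reads or writes.
    return bool(a.writes & (b.reads | b.writes)) or bool(b.writes & a.reads)


def schedule(txs):
    """Greedily pack transactions into batches.

    Transactions inside one batch do not conflict, so a batch could be spread
    across as many cores or chips as are available. A transaction is always
    placed after the last batch holding something it conflicts with, which
    preserves the original order among conflicting transactions.
    """
    batches = []
    for tx in txs:
        last_conflict = -1
        for i, batch in enumerate(batches):
            if any(conflicts(tx, other) for other in batch):
                last_conflict = i
        target = last_conflict + 1
        if target == len(batches):
            batches.append([])
        batches[target].append(tx)
    return batches


if __name__ == "__main__":
    txs = [
        Tx("pay_1", writes=frozenset({"alice", "bob"})),
        Tx("pay_2", writes=frozenset({"carol", "dave"})),
        Tx("pay_3", reads=frozenset({"bob"}), writes=frozenset({"erin"})),
    ]
    for i, batch in enumerate(schedule(txs)):
        print(i, [tx.name for tx in batch])
```

In this sketch the first two payments land in the same batch and could run side by side, while the third has to wait because it reads an account the first one writes.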

Georgios:But then it conflates with at least my original definition of being able to have this constraint on the verification side. So let's say...

Anatoly:But you're breaking your own constraints. You're telling me that it's okay for me to only verify the beacon chain. Sure. I have effectively a beacon chain...

Georgios:You do not need to verify the beacon chain, you only need to verify a shard.

Anatoly:So I'm skipping the verification of all the external computation to my shard.

Georgios:All the external computation is guaranteed to be correct, because you're running a two phase commit protocol whenever there is a cross-shard transaction. So any state change involving your shard is guaranteed to be correct.
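As a rough illustration of the two phase commit idea mentioned here, the following sketch moves a balance between two shards. It is a toy under stated assumptions: the `Shard` class, its `prepare`/`commit`/`abort` methods, and the coordinator function are hypothetical, and real cross-shard protocols also have to handle timeouts, crashes, and dishonest participants.

```python
class Shard:
    """Hypothetical shard holding account balances."""

    def __init__(self, name, balances):
        self.name = name
        self.balances = dict(balances)
        self.pending = {}  # tx_id -> (account, delta) reserved in phase 1

    def prepare(self, tx_id, account, delta):
        # Phase 1: vote yes only if the account is not locked by another
        # pending transaction and the change keeps the balance non-negative.
        locked = {acct for acct, _ in self.pending.values()}
        if account in locked:
            return False
        if self.balances.get(account, 0) + delta < 0:
            return False
        self.pending[tx_id] = (account, delta)
        return True

    def commit(self, tx_id):
        # Phase 2 (success): apply the reserved change.
        account, delta = self.pending.pop(tx_id)
        self.balances[account] = self.balances.get(account, 0) + delta

    def abort(self, tx_id):
        # Phase 2 (failure): drop the reservation if there is one.
        self.pending.pop(tx_id, None)


def cross_shard_transfer(tx_id, src, src_acct, dst, dst_acct, amount):
    """Coordinator: commit on both shards only if both voted yes."""
    votes = [
        src.prepare(tx_id, src_acct, -amount),
        dst.prepare(tx_id, dst_acct, amount),
    ]
    if all(votes):
        src.commit(tx_id)
        dst.commit(tx_id)
        return True
    src.abort(tx_id)
    dst.abort(tx_id)
    return False
```

The point of the sketch is only that a shard applies a cross-shard change after every involved shard has agreed to it, so a validator on one shard does not have to re-execute the other shard's history.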

Anatoly:But zero knowledge proofs are economically guaranteed. Well, whatever you want, I'm not going to design the system now, but yes, either you could use some optimistic game, again with some fraud proof and some data availability scheme with erasure codes, or you could use a zero knowledge proof to do that, to prove that the execution is correct. Yes. You can do either. Without going into zero knowledge proofs, if you're using some kind of economically guaranteed scheme on external stuff, then your verification of your single shard transactions buys you zero security, absolutely zero, over just doing TOFU and connecting to a giant Solana network.

Georgios:I am willing to consider the point that if you're using optimistic games, you do not have any security guarantees until the timeout for the whatever operation you're doing, passes. And, you know, in order to have reasonable security, the timeout has to be large. And if the timeout is large, you lose the whole scaling property that you were looking for. So I can consider that subpart specifically for the discussion, but I'm not going to consider the fact that in this sense, the sharding helps you reduce the load on your box.

Anatoly:The reason you're sharding, right, is because you have some load constraints. You're saying my network cannot exceed a committee size of 200 per shard. If you design your network, design the actual architecture of the code, like the stupid horizontal scaling, such that each one of these validators can just double their hardware by using two boxes each, right, then that committee can grow to 400.

Tarun:So I actually think that one of the things that's being conflated here is the execution on each node, which could be very high throughput via hardware, versus the execution post-consensus, like ex post consensus. I think that Anatoly's point is that the execution per node can continually keep growing. And Georgios' point is that the ex-post consensus throughput can't keep growing without splitting data up. And so before we jump into why these two aspects also have different economic consequences, which you both just indicated, in the sense that if you have these optimistic things, designing these exit games is quite difficult. On the other hand, in the singly-threaded world, building a perfect economic model for fees is extremely difficult. But let's take a step back for the listener, and maybe answer a couple of questions. Which applications of smart contracts require high bandwidth? So in some sense, which are the applications you think will have these somewhat data localized scenarios, where sharding could work really well, and which ones require low latency, where sharding is just fundamentally unable to provide this value? And how do you rank these applications? Because I think that might give the listener, end users of these systems, a little more of an idea of the tradeoff from a high level.

Georgios:Yeah. So having multiple merchants, each merchant can very easily have their own separate state, and you can very easily separate it into non-overlapping pieces of memory, which again can be done very cleanly, both in sharded and in non-sharded environments, from a performance perspective, again, not from the perspective of verification after consensus is reached. But an application which would require bundling up more state would be something like trading, where, as Anatoly pointed out, and I'm willing to take the hit here, when you have sharding, if you have an options contract on one shard, a futures contract on another shard, Uniswap on another shard, and so on, and you want to make a transaction that involves all three, because you want to take some combo position or you want to do some arbitrage or something, then the system that you're trying to use is not too sharding friendly. So I would expect that if you're doing some super composable activity, these apps should indeed live in the same shard. However, applications involving payments in localized, literally localized regions, imagine at the country or regional level, definitely could live in separate shards, because how often, for example, do users in one city transact with users in another? So on that end, I do think that there's a good application here, both from a high bandwidth perspective and also from a latency perspective. Latency, I view it as a cherry on top, in that it just improves your user experience. Because you, as a consumer, instantly see the check mark that your transaction got included, which, if I understand, Solana is very good at.

Anatoly:I'll make an outrageous statement. I think the key part that is necessary, the key application is censorship resistance. It's the number of nodes that decide what goes in and what goes out of the network. So if you have, imagine Binance on one side of the network, Coinbase on another, if they're in separate shards, right. You now have two different committees that get to decide flow between any two of these major financial institutions and take arbitrage opportunities, and potentially block some side of flow versus another. This isn't a network that's going to be like, that's going to replace the vampire squid, right? Does that make sense? Right? We're building a financial software fabric, that's supposed to eat, software eats the world, eat the rest of this regulation based space, that banks and people and paper and governments have built. If we have networks where it takes a very small committee to start controlling flow between any two entities. And when you shard, you actually split your committee, right, you're reducing the size...

Georgios:But you start here with an assumption. So here you started with an assumption that the initial committee, the initial participants in the initial block producing participants can be easily corrupted. How do you start with that assumption? And if you start with that assumption for the sharding system, that should also apply for the non sharded system.

Anatoly:Exactly. So the benefit of a non-sharded system is that we can take money and increase censorship resistance, that we can take dollars and pour [inaudible]. And that increases censorship resistance because the size of the committee can grow.

Georgios:Yes. How does that not apply to the sharded environment?

Anatoly:Because you've just split your network, right? You've decided that your sharded network now has...

Georgios:No, I shuffle my validators regularly. And I guarantee that, according to some probability distribution, there would be no time, when the beacon chain's validators get assigned to shards, that a shard's validators are more than, you know, some percentage corrupt, so they stay below the safety threshold.
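The probabilistic guarantee described here can be estimated with a short calculation. This is a sketch under a static-adversary assumption, where a fixed set of corrupt validators is sampled uniformly at random into a committee (a hypergeometric draw). The function names, the example numbers, and the one-third threshold are illustrative assumptions, not parameters of any specific network.

```python
from math import comb


def prob_committee_corrupt(total, corrupt, committee, num=1, den=3):
    """Probability that strictly more than num/den of a random committee is corrupt.

    total:     validators in the whole pool
    corrupt:   how many of them are corrupt (fixed in advance, static adversary)
    committee: validators sampled without replacement into one shard
    """
    need = committee * num // den + 1  # smallest corrupt count above the threshold
    ways = sum(
        comb(corrupt, k) * comb(total - corrupt, committee - k)
        for k in range(need, min(corrupt, committee) + 1)
    )
    return ways / comb(total, committee)


def prob_any_shard_corrupt(p_single, shards):
    # Rough estimate that treats shards as independent draws.
    return 1 - (1 - p_single) ** shards


if __name__ == "__main__":
    for size in (50, 100, 200):
        p = prob_committee_corrupt(total=10_000, corrupt=2_000, committee=size)
        print(size, p, prob_any_shard_corrupt(p, shards=64))
```

The calculation shows why committee size matters: with the same share of corrupt validators in the pool, a smaller committee crosses the threshold far more often than a larger one. It says nothing about an adversary that corrupts validators after they are assigned, which is the dynamic case raised next.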

Anatoly:Right. So that is assuming a static corrupted network.

Georgios:Yes.

Anatoly:Right. But the reality of the world is that when this stuff grows, right, corruption doesn't become static. It becomes dynamic, because corruption itself is a feature. Whoever the validators are, if they can control the flow between major financial institutions, that itself becomes miner extractable value, right? And for this system to be fair and transparent, it needs to be effectively at the capital costs of miner extractable value, right. That the miners are effectively working as our market makers, or trying to extract whatever they can out of this financial infrastructure. But by doing so also increasing the censorship resistance because there's more of them competing for it. If you're limiting that, right, if you've come up with some hacky way, I don't want to say hacky, sorry. If you come up with some purely random way to split this up, and you're now creating smaller groups, right, take that to the limit... You will have one validator at Coinbase, one validator at Binance. Even if they're dynamically assigned and reassigned at random, there's going to be some potential for them getting corrupted, right, once the flow becomes large enough.

Tarun:So actually this brings us to another important point, which is related to the economics of transaction fees in sharded versus non-sharded systems. Georgios has spent a bunch of time recently investigating some of these proposals. Maybe you want to give a little background on that, Georgios.

Georgios:So recently, Ethereum has had a few interesting proposals for its block space market. Currently, the block size in Ethereum is set by the miners. It was 10 million gas, which is something like 30 to 50 kilobytes, a few weeks ago. Now it's 12.5 million gas. But these new proposals, specifically EIP-1559 and EIP-2593, have a way to introduce a gas price oracle to the system, which is achieved in EIP-1559 by introducing a fee burn to the protocol. What this means is that, in order to have a good gas price oracle and congestion oracle, you need to have a metric of organic demand for block space. Currently you do not have a good metric for that, because if I'm a miner, I can spam the chain with my own transactions, and then I will get the fees back myself. So what EIP-1559 does is that, by introducing a fee burn, it completely removes the potential for such a move, which means that we finally have a way to know how congested the chain is. This has an interesting implication for sharding, because if you can know how much congestion exists in the system, you can dynamically add or remove shards. So if you see the system is congested and the congestion is going up, you say, here's one more shard for the system. And now you assign some new validators to that. Or if the system is not congested, you remove a shard. And all the validators which were previously in the underutilized shard get spread out across the other shards.
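To make the congestion oracle idea concrete, here is a minimal sketch. The base fee update follows a simplified reading of the EIP-1559 rule, in which the burned base fee moves by at most one eighth per block depending on how far gas used is from the target. The shard-count rule is purely hypothetical: the thresholds `high` and `low` and the function `adjust_shard_count` are assumptions made for illustration and are not part of any proposal discussed here.

```python
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8  # base fee moves by at most 1/8 per block


def next_base_fee(base_fee, gas_used, gas_target):
    """Simplified EIP-1559 style update of the burned base fee (integer math)."""
    if gas_used == gas_target:
        return base_fee
    delta = (
        base_fee * abs(gas_used - gas_target)
        // gas_target
        // BASE_FEE_MAX_CHANGE_DENOMINATOR
    )
    if gas_used > gas_target:
        return base_fee + max(delta, 1)  # always rise by at least 1 when congested
    return base_fee - delta


def adjust_shard_count(shards, recent_base_fees, high, low, min_shards=1):
    """Hypothetical elasticity rule driven by the base fee as a congestion signal."""
    if all(fee > high for fee in recent_base_fees):
        return shards + 1  # sustained congestion: open one more shard
    if all(fee < low for fee in recent_base_fees) and shards > min_shards:
        return shards - 1  # sustained slack: retire a shard, respread validators
    return shards
```

Because the base fee is burned rather than paid to the block producer, a producer who stuffs blocks with their own transactions pays for the signal they distort, which is what makes the fee usable as an input to a rule like `adjust_shard_count`.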

Anatoly:How long is it going to take for you to add or remove a shard? Time? Just at least one beacon round sync, probably more.

Georgios:It should be one round. It should be one round. Because, ultimately, if you think on a technical level, how this is done, per round, let's say per shuffling period, the beacon chain says, Anatoly, you go to this shard, Georgios go to this shard, and Tarun goes to this shard.

Anatoly:These are state shards, right?

Georgios:Pardon?

Anatoly:So the state has... these are state shards. You still need to re-split the three... You basically have to like Randall hash the state or something, and like rejigger all the applications?

Georgios:You mean.. Sorry I'm not following.

Tarun:So one of the reasons I wanted to bring this up was, this sort of elastic block size and demand driven thing is in some ways, potentially a way to avoid this two-parties-controlling-everything system where you end up in Anatoly's limit of "Coinbase owns one shard, Binance owns the other shard." And they're both trying to commit MEV style attacks against each other.

Georgios:Right. Because everyone would see that they would stop transacting on that shard and the system would then deallocate [inaudible]...

Tarun:Exactly. So I was curious about both of your personal opinions on whether there exist economic fee models that can get around some of these issues. 'Cause Anatoly has a very good point: if, say, the number of validators doesn't grow as fast as the demand on the network, then you sort of have this concentration of wealth behavior, where certain shards will be the rich shards and certain other shards will be the poor shards. However, it's possible to do some type of fee redistribution or subsidy redistribution. That's sort of a little bit of what you were looking into, Georgios. So I want to hear both of your views on whether it's actually possible to do such a redistribution, to ensure that the validator pool grows organically with the demand pool.

Anatoly:The way we've designed Solana, which is horizontally scaling with hardware, the fees are only gonna... they are already like 10 bucks per million transactions. And they're only gonna drop because they're priced based on egress cost. It's 50 bucks a terabyte right now of egress. That's 4 billion transactions split amongst all the validators. Anything above that means that you are designing something that is extracting value where it shouldn't, right. That is the cost of the data, of actually sending that thing through. So when you split your system and you add all this complexity, and you shard it, your fees for the user actually go up. You look at Ethereum, right? They've artificially limited their block size. And therefore the fees for the user are much higher because you can only stuff so many transactions in a block.
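The numbers quoted here can be checked with a little arithmetic. The sketch below only uses the figures from the conversation (10 dollars per million transactions, 50 dollars per terabyte of egress, 4 billion transactions per terabyte). It assumes a decimal terabyte, and reading the ratio at the end as the number of copies the fee could pay for is an interpretation, not something stated in the debate.

```python
BYTES_PER_TB = 10**12  # assumption: decimal terabyte


def bytes_per_tx(tx_per_tb):
    """Implied average transaction size if one terabyte carries tx_per_tb transactions."""
    return BYTES_PER_TB / tx_per_tb


def egress_usd_per_tx(usd_per_tb, tx_per_tb):
    """Cost of sending one copy of one transaction at the quoted egress price."""
    return usd_per_tb / tx_per_tb


def copies_paid_for(fee_usd_per_tx, usd_per_tb, tx_per_tb):
    """How many copies of a transaction the fee would cover at egress cost."""
    return fee_usd_per_tx / egress_usd_per_tx(usd_per_tb, tx_per_tb)


if __name__ == "__main__":
    fee = 10 / 1_000_000  # 10 dollars per million transactions
    print(bytes_per_tx(4_000_000_000))
    print(egress_usd_per_tx(50, 4_000_000_000))
    print(copies_paid_for(fee, 50, 4_000_000_000))
```

Under these assumptions a transaction is about 250 bytes, one copy costs about 1.25e-8 dollars to send, and the quoted fee covers roughly 800 such copies.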

Georgios:By design.

Anatoly:Exactly. Okay, great. But what does that design buy you? But you can, sometimes...

Georgios:Design buys you good settlement assurances about the transaction that you're making. It guarantees that this transaction will be included and it's solid, it's included and you know that it's not going to get reverted. And you also have the knowledge that, at a later point in time as a user that is joining the system, you can be sure because you have verified the system's state, but it's good enough that...

Anatoly:You've only verified a single shard's state, right. You've decided that that much strong subjectivity is okay, but you can be weakly subjective about all the other shards, and use whatever economic games you want. 'Cause I can do the exact same thing, right. In a single shard system, I can bifurcate the data. I can verify the main chain of hashes, and all the system transfers and ignore all the other virtual machines and just look at mine. Right? I'm not getting anything out of the sharded system, except lack of data availability and higher fees.

Georgios:I'm not convinced about that point. So firstly, why do you think that you have higher fees in the sharded system, and why does the sharded system also have data availability issues? I understand the basic argument around the validator not releasing the data, in an adaptive... So everything I'm saying, it's with a static adversary. I'm not willing to debate dynamic adversaries because that's out of the scope that I'm arguing for. But in that context, any kind of data availability attack can be mitigated either by not using the optimistic games and using the zero knowledge proof ones instead, or by using an erasure code, with which you ensure that the same data gets across the network, which you surely know how it works because that's also how your block propagation protocol works.

Anatoly:Correct. But if you have to download the data from all the shards, why even bother? What am I getting?

Georgios:Well, you never need to download the data from all the shards.

Anatoly:Then I never need to download the data from all the other virtual machines that I don't care about. I have my Anatoly token, it runs my VM. I look at only the transactions related to the Anatoly token and I verify it. And I'm done.

Tarun:So you guys have actually brought me to a good point, especially with regards to data availability. It is probably the biggest pain in a lot of crypto systems. And that's significantly worse for sharded systems, because there's added communication and routing complexity. And there's a sense in which the UX is quite different from user to user. And I think it's pretty clear that the single shard system just has much better UX, but what do you view as the advantages and disadvantages to each side, given these complexities? And, while there are certainly issues with sharding, with the UX globally for the end user, I think that in some weird specialized use cases, and I think we've seen this with a lot of the Layer-2's on Ethereum, people actually have had much better UX for their applications. And I would like to hear how you guys both view the pros and cons of sharded UX vs non-sharded UX.

Anatoly:In layer-2, you're giving up some security for that. You're saying that I have some central server, and maybe it's rolling up all the transactions to the main chain, that can be challenged later and that's running on one computer and I get to evaluate my state. So you've given up a bunch of security and locked up your tokens effectively at a centralized exchange that has a public audit trail, which is a huge improvement.

Georgios:No, that's most definitely not true though. So that was true in the original Plasma designs, for the data availability concerns, because in Plasma you had an operator, they were getting transactions and they were submitting the Merkle roots of the transactions on-chain. And then you would use a Merkle root to withdraw your money back to the main chain. In all of the designs, what we figured out was that Plasma was a premature optimization, and you should just solve "data availability" by dumping all the data on chain. And what this allows you to do is have multiple block producers. So earlier you said you have one block producer. That is for sure not true, because you can have multiple block producers picking up from where the previous block producer left off, because they can always reconstruct the state.
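The Merkle root mechanism mentioned for Plasma can be sketched as follows. This is a generic binary Merkle tree over SHA-256 and not the layout of any particular Plasma implementation: the helper names, the choice to duplicate the last node on odd levels, and the proof encoding are assumptions made for illustration.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root_and_proof(leaves, index):
    """Return (root, proof) for leaves[index]; leaves must be non-empty.

    The proof is a list of (sibling_hash, sibling_is_left) pairs from the
    leaf level up to the root.
    """
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # duplicate the last node on odd levels
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify_proof(leaf, proof, root):
    """Recompute the path from a leaf to the root and compare."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


if __name__ == "__main__":
    txs = [b"alice->bob:5", b"bob->carol:2", b"carol->dave:1"]
    root, proof = merkle_root_and_proof(txs, 1)
    print(verify_proof(txs[1], proof, root))
```

Only the root needs to go on-chain for a proof like this to be checked, which is also the weakness discussed here: if the operator withholds the leaves, nobody else can build the proofs, which is why later designs post the data itself.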

Anatoly:Correct. So I'm not disagreeing with you. What I'm saying is that, in the application itself, when it's loaded and that optimistic roll-up is running, let's say I'm running a DEX, and the DEX has an order book inside the roll-up, who is deciding which orders get picked, not the Ethereum block producers, right. But whatever's running this optimistic roll-up.

Georgios:Yes. Whoever is going to be the block producer for that block. Yeah.

Anatoly:For that roll-up itself. Not for the...

Georgios:No, for that block, for that block. And the roll-up, the idea is that anybody can take a bunch of transactions and publish them on the roll-up block, as long as they build on the previous hash and they submit a valid state transition.
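The rule described here, that anybody can publish a roll-up block as long as it builds on the previous hash and carries a valid state transition, can be sketched like this. It is a toy model: the `RollupContract` class re-executes the transfers eagerly, standing in for the fraud proof or validity proof a real roll-up would rely on, and all names and the JSON-based state root are assumptions for illustration.

```python
import hashlib
import json


def state_root(balances):
    """Toy commitment to the state: hash of the sorted balances."""
    encoded = json.dumps(balances, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def apply_transfers(balances, transfers):
    """Apply (sender, receiver, amount) transfers, rejecting overdrafts."""
    new = dict(balances)
    for sender, receiver, amount in transfers:
        if amount <= 0 or new.get(sender, 0) < amount:
            raise ValueError("invalid transfer")
        new[sender] -= amount
        new[receiver] = new.get(receiver, 0) + amount
    return new


class RollupContract:
    """Hypothetical layer-1 contract that keeps all roll-up data on chain."""

    def __init__(self, genesis):
        self.genesis = dict(genesis)
        self.state = dict(genesis)
        self.head = state_root(genesis)
        self.posted = []  # every accepted block's data, visible to anyone

    def submit(self, producer, prev_root, transfers, claimed_root):
        if prev_root != self.head:
            return False  # does not build on the previous hash
        try:
            new_state = apply_transfers(self.state, transfers)
        except ValueError:
            return False
        if state_root(new_state) != claimed_root:
            return False  # claimed state transition is not valid
        self.state, self.head = new_state, claimed_root
        self.posted.append((producer, list(transfers)))
        return True


def reconstruct(contract):
    """Any would-be producer can rebuild the state from the posted data."""
    state = dict(contract.genesis)
    for _, transfers in contract.posted:
        state = apply_transfers(state, transfers)
    return state
```

Since every accepted block's data sits in `posted`, a second producer with no connection to the first can call `reconstruct` and submit the next block, which is the free entry argument made in the next exchange.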

Anatoly:So, whoever's doing that, that's the censor for that particular roll up.

Georgios:No, for that specific block of the roll-up, what I'm saying is that because anybody can be a block producer, you have free entry to the system. So if anybody can enter the system, in equilibrium, the system will be censorship resistant, because anybody is able to bundle up transactions and confirm them on the main chain.

Anatoly:Okay. And that ultimately leverages the censorship resistance of the main chain, which gets to decide between two people who are trying to get free entry and attach to the roll-up.

Georgios:Yes. It ultimately gets decided, you leverage the security of the proof-of-work chain. I prefer it to be on the proof-of-work chain. Yeah, you inherit the security from that. So you don't have less security, as you said before. Except for some synchrony assumptions, where the miner, for example, could delay all your transactions for a week. But if a miner is consistently censoring transactions for a week, it's likely that the whole proof-of-work chain is corrupt.

Anatoly:That is effectively like a single sharded network, except...

Georgios:But you can have multiple roll-ups running at the same time. And you can have roll-ups running on sharding. So that's the idea.

Anatoly:But why do you need the sharding part?

Georgios:So this is a good point. I've argued multiple times in the past that having multiple Plasma chains or roll-up chains or whatever, attached to the same layer-1, is very close to a sharding design, because again, remember, you can think of it as each of the layer-2s being a shard, and the main chain being the beacon chain. So the architecture is very similar, but what I'm saying is that they're not mutually exclusive. You can have both.

Anatoly:You don't need the sharding side, right?

Georgios:So that's why I'm not arguing only for sharding. I'm also arguing for sharding and off-chain. That's the original point. That it's both sharding and off-chain. I want to reduce the on-chain footprint.

Anatoly:So the nice thing about optimistic roll-ups is they effectively segment memory, right? So now you have transactions that don't overlap, and you're rolling them all up into the censorship resistant network, and it can execute all of that stuff in parallel optimistically, without waiting for these challenge games. And now you have atomic state transitions between all the roll-up states, because the censorship resistant part of the network has already executed all of those state transitions, and knows exactly what the bits are. And that's effectively...

Tarun:Can I get a quick question related to this? Do you view sharding and off-chain layer-2s as the same, or do you view them as very distinct in terms of the security model?

Anatoly:Oh, very distinct. And the part of the sharding that I think is bad, is that when you're splitting the committees, that are deciding stuff, you're splitting your capacity of the network. You're saying that I now have committees with less hardware, with smaller bandwidth, that are now trying to do twice as much work, because they're working in parallel. But the fees for the user are based on the spam resistance of those sub networks, which means that those fees are gonna be higher than if you combine the hardware and have twice as much capacity, because capacity of the actual throughput in any given second is what's gonna drive your fees. And not only are you splitting the capacity, you're also splitting the security in that dynamic attacker sense, because now I have two shards. And if I'm trying to send money between both of those shards, I have two things that get to decide who goes first. And that's the part that I think is fundamentally, is going to be a blocker for moving the Bank of America's, the Visa's, the Goldman Sachs to a single giant financial ecosystem.

Tarun:Yeah. So I think fundamentally it does seem like one of the big disputes here is actually that off-chain and sharding solutions are just viewed as completely economically different. And one of the things that I think is unique to Solana, that we haven't quite covered, which does actually in some ways interface with how Solana would service a layer-2 blockchain, is the proof-of-history component within Solana. So, as far as I understand, it's sort of a rough, but not provable, approximation of a verifiable delay function, which is a function that you basically can't parallelize. You can prove that even if you parallelize the compute of this function, it still takes a certain amount of time to evaluate. How much do you expect that the usage of proof-of-history, and of approximations of verifiable delay functions, affects your security model? Especially if proof-of-history has to interact with the layer-2. And if it turns out that proof-of-history is not exactly a VDF, shouldn't there be a lot of forking, which will have similar issues to sharding UX-wise?

Anatoly:Yeah. So the security side is harder for me to actually analyze, because here is the crux: we have asynchronous safety. It means that the network doesn't decide on the block and then go to the next block. We are optimistically attaching blocks to what we think is the right fork. When you have asynchronous safety, you're effectively giving the attacker more information about the state of the network, and if they can withhold a bunch of the blocks, and withhold a bunch of the votes and censor, that could potentially create forks and cause rollback. And that rollback, while it's not going to cause a double-spend on an exchange, may leak information about trading intent or something like that. If everybody's waiting to trade and all of a sudden, they see that these trades are going the other way from other block producers, and I'm able to roll back that part of the chain, effectively that delays finality. So we can do a bunch of tricks where that doesn't delay finality, but the security side there, isn't... What proof-of-history gives us for security is that we can force the attacker to delay some real amount of time before they can produce a block. And that allows the rest of the network to actually come to finality on what they're building on. And the attacker can't do it ahead of time because that forced delay is what's slowing him down. If they can speed up their block production, then they could potentially create more forks and do a denial of service on the network. So that is the tradeoff. And I don't know how real that tradeoff is, because as you guys have said, we're hitting the limits at 3 nanometers, right? Going from 5 nanometers to 3 nanometers is going to be like, what, $8 billion. Going from three to whatever the next step is, is going to be some absurd amount of money. And the speed of the VDF is going to approximate the fastest possible implementation that you can build, especially for our very, very dumb SHA-256 based design, really, really quickly. So an attacker is going to have a very hard time getting more than a 30% speedup from cooling or something like that.
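The "very, very dumb SHA-256 based design" can be pictured as a sequential hash chain. The sketch below is a generic illustration and not Solana's actual code: the function names, the way events are mixed in, and the entry format are assumptions. What it shows is that each output depends on the previous one, so producing N ticks takes N sequential hashes no matter how many cores the producer has.

```python
import hashlib


def poh_tick(state: bytes, event: bytes = b"") -> bytes:
    """One sequential step: hash the previous state, optionally mixing in an event."""
    return hashlib.sha256(state + event).digest()


def generate_poh(seed: bytes, num_ticks: int, events=None):
    """Run the chain for num_ticks steps.

    events maps a tick index to bytes recorded at that tick, which proves the
    event existed before every later hash in the chain was computed.
    """
    events = events or {}
    state = seed
    entries = []
    for i in range(num_ticks):
        event = events.get(i, b"")
        state = poh_tick(state, event)
        entries.append((i, event, state))
    return entries


def verify_poh(seed: bytes, entries) -> bool:
    """Replay the chain and compare every recorded hash."""
    state = seed
    for _, event, expected in entries:
        state = poh_tick(state, event)
        if state != expected:
            return False
    return True


if __name__ == "__main__":
    chain = generate_poh(b"genesis", 1000, {10: b"tx:alice->bob"})
    print(verify_poh(b"genesis", chain))
```

Generation is inherently serial, but because every intermediate hash is recorded, a verifier can split the entries into segments and replay each segment independently. The attack discussed here corresponds to someone computing `poh_tick` meaningfully faster than everyone else.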

Tarun:Yeah, that definitely makes sense. Georgios, do you have any thoughts on how a proof-of-history like system interacts with layer-2s or whether that affects the security?

Georgios:Yeah. I will describe it from the layer-2 side. So all I want as a layer-2 mechanism is to have somewhere where I can reliably adjudicate my disputes. So earlier, I said that I would prefer to have a proof-of-work chain, for the reason that I can look at the transaction, wait for it to have enough confirmations, and I'm guaranteed that this transaction is final. So in the roll-up case, I can know that this checkpoint that I used for some transaction is going to be valid and I will always be able to withdraw from it, because Nakamoto consensus is simple, now that we understand it. What I'm concerned about is what you said about proof-of-history. What if there's a fork, and the probability, let's say, of one of the forks being discarded in favor of another one is not easily determined? In proof-of-work, it is determined by assigning some probability to some attacker hashpower. So what I do not understand is how I know how likely a long fork is to happen.
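For the proof-of-work side of this comparison, the chance that a fork overtakes a confirmed transaction can be computed from the attacker's share of hashpower. The sketch below follows the calculation in section 11 of the Bitcoin whitepaper, and the function name and example values are illustrative. It models only the simple race between an honest chain and an attacker chain, nothing specific to roll-ups or to Solana.

```python
from math import exp, factorial


def attacker_success_probability(q: float, z: int) -> float:
    """Probability an attacker with hashpower share q ever catches up from z blocks behind.

    q: attacker's fraction of total hashpower (honest share is p = 1 - q)
    z: number of confirmations the recipient waits for
    """
    p = 1.0 - q
    if q >= p:
        return 1.0  # a majority attacker always catches up eventually
    lam = z * q / p  # expected attacker progress while z honest blocks are mined
    total = 1.0
    for k in range(z + 1):
        poisson = exp(-lam) * lam**k / factorial(k)
        total -= poisson * (1 - (q / p) ** (z - k))
    return total


if __name__ == "__main__":
    for z in (0, 1, 3, 6):
        print(z, attacker_success_probability(0.1, z))
```

The probability falls off quickly with each confirmation when the attacker is a minority, which is the sense in which the likelihood of a long fork is easy to reason about in proof-of-work.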

Anatoly:I mean it's based on the same slashing kind of economic, weak subjectivity thing, in every proof-of-stake network. So if you're okay with...

Georgios:Could you elaborate?

Anatoly:It's a deterministic system, right? So there isn't some probability of a fork switching or not. There is a possibility of you observing part of the network with some set of votes that are corrupted, and therefore coming to the conclusion that we have finality, when in fact there's an attacker that's double voting and doing a safety violation. So that kind of attack, there's no way to know whether it's happening or not, unless you have complete view of the network. And the only defense against that kind of attack is slashing.

Georgios:And also in an asynchronous network. So I'm not sure what the Solana assumptions are, but if you are in an asynchronous network and you haven't seen somebody vote, you cannot tell if they haven't voted or they have voted or somebody is delaying their messages. Right?

Anatoly:Correct. But as soon as you see people's votes, you have deterministic assumptions about whether this block is final or not. And if you see the votes, the only options are that this block is final or somebody needs to get slashed. So, if the amount of slashing is reasonable enough for you to be okay with, then your application can move forward. And I think this goes back to the weak subjectivity thing that we're talking about, right? All of these networks that are proof-of-stake networks rely on some amount of slashing or economic punishment. They're not hard entropy that's converted into zeros...

Tarun:For reference, Gün would be very angry to hear that.

Georgios:He has been in the past.

Anatoly:Making an even weaker assumption, saying that they don't need slashing because the price of the token will drop if the network is misbehaving, which to me is, even going further to the weak subjectivity thing.

Tarun:Weak squared subjectivity.

Anatoly:Yeah, exactly.

Tarun:Okay. I think we are getting close to time, so I want to make sure Richard can get in the audience questions. But before that, I just want to ask one last question. I think we covered a lot of things in terms of the sort of crypto economic security, looking at how fees change between sharded and non-sharded systems. But one of the big issues with cross-chain and sharded systems themselves is that the cross-chain transactions or cross-shard transactions tend to increase the crypto economic attack surface area quite a bit, because...

Georgios:Sorry, can you repeat that?

Tarun:The cross-chain transactions in sharding systems like cross-shard transactions, they tend to increase the crypto economic surface area quite a bit, because you now have to reason about the cost of a program that has a mutex, or a move to new thread, or a halt, or some of these operations that in the Solana singly-threaded world, you don't totally have to think about.

Tarun:And we've seen in a bunch of live networks, Kusama, Near, and Near's testnet, where a lot of the really crazy griefing attacks, that really cost users a lot of money, came from people figuring out how to cause deadlocks, and that were economic in nature. Do you think it's actually possible to like patch this very gigantic crypto economic surface area? Or are we stuck with these kludgy exit games and griefing vectors as the cost of throughput?

Georgios:Of course. So the issue is that, when you engage in these attacks, the objective is to degrade the system's performance to a single thread, basically. Do we agree on that?

Tarun:Yes. But usually with some economic... the transactions aren't free, there's some sort of cost that...

Georgios:Yeah. So, to respond to what you were alluding to, there is probably some mispricing, so there must be some economic simulation, which surely your company is very good at, on cross-shard transactions. So a transaction involving accounts only in one shard should not be priced in the same way as a cross-shard transaction. And I would expect that it should be priced proportionally, or it should scale with the number of shards it touches. So firstly that. Secondly, the other problem has to do with the fact that all of these systems leverage fishermen, which Anatoly kids about because they are a problem created to solve problems that sharding solves. I think I just said what you wanted to say before you said it, so yeah. But these problems manifest themselves typically when you use these fraud-proof kind of dynamics, where you broadcast the transaction and you need to wait some timeout for it to be disputed, or caught by a fisherman. So, I know most people consider them magic, but I do think that zero-knowledge proofs are a very, very important tool in that area. Clearly, their proving times have been getting supercharged, in the sense that they've been going down a lot. And with the advent of universal proving systems, by which I mean systems which have a continuous trusted setup and a universal trusted setup, meaning that it can be adapted for various applications without redoing the ceremony, or with STARKs, which do not require a trusted setup altogether... The point being that I believe that you can remove all these problems by using zero-knowledge proofs, but of course, this is not going to happen with any of these systems right now in their current versions. So the short-term solution, in my opinion, is a lot of attention to pricing cross-shard transactions, so that there is no asymmetry between the attack cost and the attack benefit, or rather, the performance degradation an attacker can cause to the system. And long term, they should be replaced with some system that does not involve a fraud proof.
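
One simple way to picture the pricing idea Georgios describes, where a fee scales with the number of shards a transaction touches, is the sketch below. The linear formula, the function name `transaction_fee`, and the `cross_shard_multiplier` parameter are hypothetical choices for illustration; no real network's fee schedule is implied.

```python
def transaction_fee(base_fee, shards_touched, cross_shard_multiplier=1.0):
    """Fee that grows linearly with each additional shard a transaction touches.

    base_fee:               fee for a transaction confined to one shard
    shards_touched:         number of distinct shards the transaction involves
    cross_shard_multiplier: assumed surcharge per extra shard, as a multiple
                            of base_fee (hypothetical tuning knob)
    """
    if shards_touched < 1:
        raise ValueError("a transaction must touch at least one shard")
    extra_shards = shards_touched - 1
    return base_fee * (1 + cross_shard_multiplier * extra_shards)


print(transaction_fee(10, 1))  # single-shard transaction pays the base fee
print(transaction_fee(10, 4))  # touching four shards costs four times as much
```

Raising `cross_shard_multiplier` is the knob that would close the gap between what an attack costs and how much degradation it can cause; choosing its value is the part that would need economic simulation.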

Tarun:Anatoly do you want to respond?

Anatoly:What is your estimated price for an ETH2 transaction?

Georgios:I have no idea. I have no idea. The transaction is priced by the market.

Anatoly:No, but like [inaudible]. Here's the thing, right? Because we're horizontally scaling before consensus, what we're doing is we're increasing the capacity of messages that the sync can handle, and effectively transactions per second. And our TPS capacity is the number of nodes we can stuff in this network, because our consensus messages are like transactions like anything else, minus some overhead on keeping track of the memberships that have been gossip'd. But more or less, the fatter the pipe we have, the more things can talk over it. So imagine a world next year, iPhone 5G comes out, it's got 4,000 SIMD lanes. It has wireless connectivity that can support one Gigabit moving at 60 miles an hour, talking to one Gigabit, moving at 60 miles an hour on the other side of the world.

Georgios:I hear what you're saying. I hear what you're saying. But honestly. So I'm not living in the US. I have not experienced Internet from the US. But my home connection, the one that I'm speaking to you through right now, is 24 Mbps. And it's not going anywhere higher than that. So of course...

Anatoly:How many transactions can you stuff in 24 Mbps?

Georgios:Clearly a lot, but the issue is not just that. The issue is how fast then can these be verified by a node syncing up, if a year has passed and I need to download a year's worth of transactions at 24 Mbps...
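
To put rough numbers on this exchange, the back-of-the-envelope sketch below works out how many transactions fit through a 24 Mbps link and how much data a year of that traffic would amount to. The 250-byte transaction size is an assumption chosen for illustration and was not stated in the debate; the function names are likewise hypothetical.

```python
LINK_BITS_PER_SECOND = 24_000_000        # the 24 Mbps home connection
ASSUMED_TX_SIZE_BYTES = 250              # assumption, not from the debate
SECONDS_PER_YEAR = 365 * 24 * 60 * 60    # 31,536,000 seconds


def transactions_per_second(link_bps, tx_size_bytes):
    """Transactions per second that saturate the link, ignoring overhead."""
    bytes_per_second = link_bps // 8
    return bytes_per_second // tx_size_bytes


def bytes_per_year(link_bps):
    """Total bytes a node would download after a year at full line rate."""
    return (link_bps // 8) * SECONDS_PER_YEAR


tps = transactions_per_second(LINK_BITS_PER_SECOND, ASSUMED_TX_SIZE_BYTES)
yearly_terabytes = bytes_per_year(LINK_BITS_PER_SECOND) / 1e12
print(f"{tps} tx/s, about {yearly_terabytes:.1f} TB per year")
```

Under these assumptions the link carries on the order of ten thousand transactions per second, and a node that is a year behind has tens of terabytes to fetch and verify, which is the tension the two speakers are arguing over.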

Anatoly:But if you have a censorship resistant network, that's your backbone, you can collaborate on the audit.

Georgios:So you're talking about getting a trusted checkpoint of your state.

Anatoly:If you already have a censorship resistant network, if the only concern you have is the audit, and all you're worried about again, is a full audit of the network, I think it's unfair to say that you're okay with a partial audit of a single shard, that you're dynamically switching somehow, but you're only okay with a single shard audit, right?

Georgios:Let me phrase it another way. I prefer systems that are opt-in rather than requiring me to opt-out. So in the systems that I am describing, I can always do the hard thing, and maybe I can fall back to something which is slightly less secure, or not secure, but requires me to make some additional trust assumptions. In the system which you are mentioning, the default approach is one which involves...

Anatoly:It's not, it's not.

Georgios:How is it not?

Anatoly:Because your active 24 Mbps connection is only required for the act of setting of consensus. I can ship you a hard drive that you can verify over mail, right? The audit trail that you're talking about, doing a full audit: one, it's not necessary for you to agree on the current state consensus, right? The second part is that for some reason, you need a full audit of Solana, but you're okay with a partial audit of a single shard on ETH2, even though your validators are supposed to switch shards dynamically, which is I think bunk, like totally bunk. If you're going to talk about a full audit, then you need a full audit of ETH2, right? Because there you're making a whole hell of a lot of assumptions about the security of all these crypto economic games between when you're switching shards. So the full audit argument, I think, is not a very strong one, and it's the easiest thing to optimize. I can literally send you the data, right? And once you have the full, entire set of the data, you have so many opportunities for parallelism; all the signature verifications can be done on GPUs. Even if we had EVM as our virtual machine, we could mark all the memory that every transaction touches. And then you can verify in post-processing, once you have the data set, and you know exactly the sequence of events, and the rest of the world can help you collaborate and pick the fastest way to verify it. That part is a much easier problem to solve than: we're hyper-connected right now, and we need to get finality as fast as possible across the world.
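
The idea of marking the memory each transaction touches and then verifying in post-processing can be sketched as a simple batching pass. The version below is a minimal illustration, not Solana's actual scheduler: the function name `schedule_batches` and the account labels are hypothetical, and every touch is conservatively treated as a write. Transactions in the same batch touch disjoint state and could be replayed in parallel, while batches run in order so that conflicting transactions keep their original sequence.

```python
def schedule_batches(transactions):
    """Group an ordered ledger into batches that are safe to replay in parallel.

    transactions: list of (tx_id, accounts) pairs in ledger order, where
                  accounts is the set of state locations the transaction touches
    Returns a list of batches (lists of tx_id). Transactions inside one batch
    touch disjoint accounts; batches must be executed in order.
    """
    batches = []
    last_batch_for_account = {}  # account -> index of last batch touching it

    for tx_id, accounts in transactions:
        # Place the transaction right after the latest batch that touched
        # any of its accounts (batch 0 if none of them were touched before).
        latest = max(
            (last_batch_for_account.get(a, -1) for a in accounts),
            default=-1,
        )
        batch_index = latest + 1
        if batch_index == len(batches):
            batches.append([])
        batches[batch_index].append(tx_id)
        for a in accounts:
            last_batch_for_account[a] = batch_index

    return batches


ledger = [
    ("t1", {"A", "B"}),
    ("t2", {"C"}),
    ("t3", {"B", "C"}),  # conflicts with t1 and t2, so it must run later
    ("t4", {"D"}),
]
print(schedule_batches(ledger))
```

Because the full ledger is already known during an audit, this pass can look at the whole sequence up front, which is the advantage Anatoly is pointing to over live execution.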

Tarun:Yeah. I think, you know, I think we've covered a lot of ground here and, you know, yeah.

Anatoly:We need more beers for the next time. There's definitely, I think room for a round two in the timeline.

Georgios:I think we are reaching each other. So I hear where you're coming from. I think it's like you originally said, that some points, some fundamental points of disagreement, they are a bit religious, you know...

Tarun:Let me tell you that, these two schools of thought are the two schools of thought that I've seen in computer science forever. Like the people who believe that, you'll just have a bigger, faster machine. And the people who believe that only algorithms will give us salvation. What did Anatoly say?

Georgios:The eternal struggle, the reincarnation... it's time there's a reincarnation of the two eternal enemies. Yeah. This debate is the manifestation of that.

Tarun:I think another interesting thing is, the religious nature of this was captured in Anatoly's "humanity is dead if we don't double the number of SIMDs." That was pretty good. It's one of the best quotes I've heard in a while.

Anatoly:If we can't scale vector products, if we stopped scaling vector products, we should all be working on bunker coin. Cause, cause it's very just like economic...

Tarun:Isn't Bitcoin already bunker coin?

Anatoly:No, we need, I'm talking about like global collapse, civilization collapse, bunker coin. We need short wave radio based packet networks. Bitcoin is way too fat for that. You need like 10 kilobits every six hours.

Tarun:Yeah. I agree.

Georgios:This is definitely a good point.

Anatoly:Richard, do you...

Richard:Yeah, it's been fascinating. Maybe let's shift gears a little bit and quickly cover two questions from the audience members. And these are sort of tangential to scaling, one more technical, the other not so much. So let's start with a technical question. This is from "Spati4l". It seems that the main benefit of sharding is not the scalability, but rather the practical way it provides for devices to restrict downloading non-essential shards, thus saving loads of storage and bandwidth. What are the no-sharding solutions to storage efficiency?

Georgios:I agree with this statement, and Anatoly, the mic is yours.

Anatoly:So, to give you an idea, right, the stuff that keeps doubling isn't just CPU power, right? It's also just storage itself. So if you remember, like you had disc drives that were four megabytes at one point, and now you can literally mail somebody a card, right, that's thin as a piece of paper that has half a terabyte in it.

Georgios:And that was in big part due to solid state disks, right? Because the hard disks, they cannot rotate fast enough, right?

Anatoly:So the problem of storage is one that is very much solved by many different approaches. Some include erasure coding and massive networks like Filecoin or something like that. But some are very, very simple, where you can actually ship somebody the actual data that they need to verify, if that is what they need. And that kind of audit is easy enough to do in a network that has this kind of trust-on-first-use approach, where the first time I do it, I go get this data, I get optical disks. You can ship them. I can verify them locally. Then I trust on first use and the first connection, and I'm good to go. And the verification side, you can optimize the heck out of that, because this is already computed, pre-computed data. At the very extreme version of this, it means that we can generate zero-knowledge proofs of all this computational stuff afterwards and figure out how to do that potentially. But the very simple way, where we're still just scaling with regular old engineering, is you can simply mark, and optimistically figure out, all the memory locations that any of this data and transactions are gonna touch, to the point where you can literally mark the physical addresses in the computer, where this memory is going to be in the physical cell, and have this thing run at 100x the speed it takes to play originally. So there's so many opportunities to speed up this process, that I think that for me to say that the cost is always going to be four years is like way, way more pessimistic, because just on the signature verification itself, a modern day 2080 Ti can do 3 million [inaudible] verifications per second. It's ridiculous. The hardware is only gonna get faster, is my point. And it's already so much faster than Ethereum. Like the capacity that Ethereum provides, it's laughable that we don't take advantage of it.

Richard:Got it, it sounds like part of your answer still alludes to what Tarun was mentioning earlier, the perennial debate in computer science about hardware versus algorithm.

Anatoly:But you're looking at the asymptotic side of the curve, when you just look around and look at the trees, right. Ethereum's capacity is what, 11 transactions per second? What is it, a card two years ago, a 1080 Ti, can do 250,000 signatures per second, right? We live in a world where, what I feel like the sharding solutions are coming from is that they've basically given up on, okay, let's actually make Ethereum run on the current set of hardware, as we have, for the last four years, right? Even at the age when Ethereum was launched, or even like the Raspberry Pi 4 has, I don't know, about a thousand SIMD lanes on the Nvidia GPU. Let's take advantage of that at least. And then see if we can actually get users to use the network, right? And to hit the capacity limits there.

Richard:Yup. Got it. Okay, great. So let's shift gears a little bit. This is the last audience question, and this comes from two people, globeDX and Adam is rusty. So tech aside, what are your views on how scaling will lead to mass market use and creation of a serious alternative digital money? Also, how does either approach unlock end user value that is greater than traditional infrastructure? So this is more of an economic/business sort of question, slightly different from the tech discussion we've been having. So basically, what will we get after either approach gets deployed and seriously scales the blockchain usage?